- Article date: 2022/12/04

Overview

| Item | Value |
|---|---|
| GitHub | https://github.com/grafana/k6 |
| Official site | https://k6.io/ |
| Demo site | |
| Developer | Grafana Labs |
| Version | 0.41.0 |
| Language | Go |
| Price | Free |
| License | AGPL-3.0 license |

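What does it do?

- Lets you write load-test scenarios in JavaScript (ES2015/ES6)

- Covers load testing, browser testing, chaos/resilience testing, etc.

It appears to be built around these guiding principles:

1. A simple test is better than no test
2. Load testing should be goal-oriented
3. Load testing by developers
4. Developer experience is super important
5. Load test in a pre-production environment

Scenarios are written in JS, but k6 itself is implemented in Go for performance.

There is also a cloud version:

- app.k6.io - Performance testing for developers, like unit-testing, for performance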

Supported protocols

- HTTP/1.1

- HTTP/2

- WebSockets

- gRPC

Creating tests by recording browser actions

By installing the extension for Chrome or Firefox, you can record your actions in the browser and turn them into a test.

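Use cases

- When you want to run load tests (the barrier to entry is low because tests are written in JS)

- When you want to run browser tests

- When you want to run tests in CI: k6 is a single binary with a CLI, so it is easy to set up anywhere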

Background

by DeepL

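Grafana k6 is an open-source load testing tool that makes performance testing easy and productive for engineering teams.

With k6 you can test the reliability and performance of your systems and catch performance regressions and problems early. k6 helps you build resilient, high-performing applications that scale.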

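Impressions

- The single binary makes it very easy to use

- Writing scenarios in JS keeps them simple

- You write JS, but the engine that sends requests and collects metrics is Go, so scripting in JS doesn't cost much performance

- Apart from the summary printed at the end of a run, there are no reporting features, so you have to rely on external tools

- Combined with k8s, tests can be distributed across pods, so you can generate more load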

Usage

Installation

brew install k6

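Tutorial setup

First, prepare a k8s cluster with kind.

Following the article "Connecting locally to a NodePort in a Kubernetes cluster built with kind", expose nginx through a NodePort (host port 8000 -> node port 30599 -> nginx port 80).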

# Create the k8s cluster
❯ kind create cluster --name 1 --config <(cat << EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30599
    hostPort: 8000
- role: worker
EOF
)

Creating cluster "1" ...

✓ Ensuring node image (kindest/node:v1.25.3) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-1"

You can now use your cluster with:

kubectl cluster-info --context kind-1

# Start nginx
❯ kubectl create deployment nginx --image=nginx
❯ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx
  name: nginx-svc
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30599
  selector:
    app: nginx
  type: NodePort
EOF

Check connectivity

❯ curl localhost:8000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Tutorial: load testing

// script.js
import http from 'k6/http';
import { sleep } from 'k6';

// Each VU runs this function in a loop: one GET, then a 1 s pause.
export default function () {
  http.get('http://localhost:8000');
  sleep(1);
}

❯ k6 run script.js

/\ |‾‾| /‾‾/ /‾‾/

/\ / \ | |/ / / /

/ \/ \ | ( / ‾‾\

/ \ | |\ \ | (‾) |

/ __________ \ |__| \__\ \_____/ .io

execution: local

script: script.js

output: -

scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

* default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

running (00m01.0s), 0/1 VUs, 1 complete and 0 interrupted iterations

default ✓ [======================================] 1 VUs 00m01.0s/10m0s 1/1 iters, 1 per VU

data_received..................: 853 B 842 B/s

data_sent......................: 80 B 79 B/s

http_req_blocked...............: avg=3.46ms min=3.46ms med=3.46ms max=3.46ms p(90)=3.46ms p(95)=3.46ms

http_req_connecting............: avg=354µs min=354µs med=354µs max=354µs p(90)=354µs p(95)=354µs

http_req_duration..............: avg=7.37ms min=7.37ms med=7.37ms max=7.37ms p(90)=7.37ms p(95)=7.37ms

{ expected_response:true }...: avg=7.37ms min=7.37ms med=7.37ms max=7.37ms p(90)=7.37ms p(95)=7.37ms

http_req_failed................: 0.00% ✓ 0 ✗ 1

http_req_receiving.............: avg=100µs min=100µs med=100µs max=100µs p(90)=100µs p(95)=100µs

http_req_sending...............: avg=57µs min=57µs med=57µs max=57µs p(90)=57µs p(95)=57µs

http_req_tls_handshaking.......: avg=0s min=0s med=0s max=0s p(90)=0s p(95)=0s

http_req_waiting...............: avg=7.22ms min=7.22ms med=7.22ms max=7.22ms p(90)=7.22ms p(95)=7.22ms

http_reqs......................: 1 0.986544/s

iteration_duration.............: avg=1.01s min=1.01s med=1.01s max=1.01s p(90)=1.01s p(95)=1.01s

iterations.....................: 1 0.986544/s

vus............................: 1 min=1 max=1

vus_max........................: 1 min=1 max=1

The request shows up in the nginx access log as well.

❯ kubectl logs -f nginx-76d6c9b8c-qmnpf
...
172.21.0.3 - - [04/Dec/2022:01:44:29 +0000] "GET / HTTP/1.1" 200 615 "-" "k6/0.41.0 (https://k6.io/)" "-"

Next, let's apply load with 10 VUs (virtual users) for 30 seconds. Since each iteration sleeps for 1 second, this works out to roughly 10 rps for 30 seconds.

❯ k6 run --vus 10 --duration 30s script.js


execution: local

script: script.js

output: -

scenarios: (100.00%) 1 scenario, 10 max VUs, 1m0s max duration (incl. graceful stop):

* default: 10 looping VUs for 30s (gracefulStop: 30s)

running (0m30.4s), 00/10 VUs, 300 complete and 0 interrupted iterations

default ✓ [======================================] 10 VUs 30s

data_received..................: 256 kB 8.4 kB/s

data_sent......................: 24 kB 790 B/s

http_req_blocked...............: avg=57.42µs min=0s med=3µs max=1.68ms p(90)=7µs p(95)=10.04µs

http_req_connecting............: avg=18.05µs min=0s med=0s max=618µs p(90)=0s p(95)=0s

http_req_duration..............: avg=11.14ms min=859µs med=11.65ms max=28.71ms p(90)=17.74ms p(95)=23.29ms

{ expected_response:true }...: avg=11.14ms min=859µs med=11.65ms max=28.71ms p(90)=17.74ms p(95)=23.29ms

http_req_failed................: 0.00% ✓ 0 ✗ 300

http_req_receiving.............: avg=85.3µs min=7µs med=28µs max=4.94ms p(90)=91.2µs p(95)=200.44µs

http_req_sending...............: avg=17.42µs min=2µs med=11µs max=762µs p(90)=27µs p(95)=36.09µs

http_req_tls_handshaking.......: avg=0s min=0s med=0s max=0s p(90)=0s p(95)=0s

http_req_waiting...............: avg=11.04ms min=826µs med=11.49ms max=28.7ms p(90)=17.67ms p(95)=23.22ms

http_reqs......................: 300 9.87962/s

iteration_duration.............: avg=1.01s min=1s med=1.01s max=1.02s p(90)=1.01s p(95)=1.02s

iterations.....................: 300 9.87962/s

vus............................: 10 min=10 max=10

vus_max........................: 10 min=10 max=10

http_reqs shows 300 requests at 9.87962/s, so we are getting roughly 10 rps as expected.
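In this closed model each VU runs the default function back to back, so throughput is roughly the number of VUs divided by the average iteration duration (here one request per iteration). The plain-JS snippet below, with a hypothetical `expectedRps` helper, checks that estimate against the numbers from the run above; it is arithmetic only and does not call k6.

```javascript
// Closed-model estimate: each VU finishes one iteration every
// `iterationSeconds`, so N VUs give about N / iterationSeconds iterations/s.
// script.js sends one HTTP request per iteration, so this is also req/s.
function expectedRps(vus, iterationSeconds) {
  return vus / iterationSeconds;
}

// Values from the 10-VU run above.
const vus = 10;
const avgIterationSeconds = 1.01; // iteration_duration avg (1 s sleep + request)
const estimated = expectedRps(vus, avgIterationSeconds);

// Observed: 300 requests over about 30.4 s of wall-clock time.
const observed = 300 / 30.4;

console.log(`estimated ${estimated.toFixed(2)} req/s, observed ${observed.toFixed(2)} req/s`);
```

If the two numbers diverge a lot, the iterations are probably spending time somewhere other than the request and the sleep.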

Ramping the load in stages

In this scenario, we:

- ramp up to 20 VUs over 30 seconds
- ramp down to 10 VUs over 1 minute 30 seconds
- ramp down to 0 VUs over 20 seconds

// stages.js
import http from 'k6/http';
import { check, sleep } from 'k6';

export const options = {
  // Each stage moves the VU count toward `target` over `duration`.
  stages: [
    { duration: '30s', target: 20 },
    { duration: '1m30s', target: 10 },
    { duration: '20s', target: 0 },
  ],
};

export default function () {
  const res = http.get('http://localhost:8000');
  // check() records pass/fail in the "checks" metric; it does not abort the iteration.
  check(res, { 'status was 200': (r) => r.status === 200 });
  sleep(1);
}
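With `stages`, k6 moves the number of VUs linearly from one target to the next over each stage's duration. The plain-JS sketch below computes that target curve for the options above. It assumes the test starts at 0 VUs and writes durations in seconds instead of k6's `'1m30s'` strings. `targetVUsAt` is a hypothetical helper, and it returns fractional values whereas k6 schedules whole VUs.

```javascript
// Stages from stages.js, with durations converted to seconds.
const stages = [
  { duration: 30, target: 20 },
  { duration: 90, target: 10 },
  { duration: 20, target: 0 },
];

// Sum of all stage durations, in seconds.
function totalDuration(stages) {
  return stages.reduce((sum, s) => sum + s.duration, 0);
}

// Linearly interpolated VU target at time t (seconds since start).
function targetVUsAt(stages, t, startVUs = 0) {
  let from = startVUs;
  let elapsed = 0;
  for (const stage of stages) {
    if (t <= elapsed + stage.duration) {
      const progress = (t - elapsed) / stage.duration;
      return from + (stage.target - from) * progress;
    }
    elapsed += stage.duration;
    from = stage.target;
  }
  return from; // after the last stage
}

console.log(totalDuration(stages)); // 140 (= 2m20s)
for (const t of [0, 15, 30, 75, 120, 130, 140]) {
  console.log(`t=${t}s -> ${targetVUsAt(stages, t).toFixed(1)} VUs`);
}
```

The total of 140 s matches the "Up to 20 looping VUs for 2m20s over 3 stages" line in the output below.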

❯ k6 run stages.js


execution: local

script: stages.js

output: -

scenarios: (100.00%) 1 scenario, 20 max VUs, 2m50s max duration (incl. graceful stop):

* default: Up to 20 looping VUs for 2m20s over 3 stages (gracefulRampDown: 30s, gracefulStop: 30s)

running (2m20.6s), 00/20 VUs, 1523 complete and 0 interrupted iterations

default ✓ [======================================] 00/20 VUs 2m20s

✓ status was 200

checks.........................: 100.00% ✓ 1523 ✗ 0

data_received..................: 15 MB 108 kB/s

data_sent......................: 171 kB 1.2 kB/s

http_req_blocked...............: avg=6.55ms min=1µs med=6µs max=954.17ms p(90)=18µs p(95)=21µs

http_req_connecting............: avg=3.02ms min=0s med=0s max=192.68ms p(90)=0s p(95)=0s

http_req_duration..............: avg=187.78ms min=167.27ms med=181.81ms max=1.41s p(90)=190.16ms p(95)=194.86ms

{ expected_response:true }...: avg=187.78ms min=167.27ms med=181.81ms max=1.41s p(90)=190.16ms p(95)=194.86ms

http_req_failed................: 0.00% ✓ 0 ✗ 1523

http_req_receiving.............: avg=3.35ms min=15µs med=90µs max=535.15ms p(90)=458.2µs p(95)=2.15ms

http_req_sending...............: avg=36.15µs min=4µs med=24µs max=2.58ms p(90)=73µs p(95)=82µs

http_req_tls_handshaking.......: avg=3.46ms min=0s med=0s max=690.28ms p(90)=0s p(95)=0s

http_req_waiting...............: avg=184.39ms min=166.08ms med=181.48ms max=1.23s p(90)=188.94ms p(95)=193.91ms

http_reqs......................: 1523 10.834243/s

iteration_duration.............: avg=1.19s min=1.16s med=1.18s max=2.41s p(90)=1.19s p(95)=1.19s

iterations.....................: 1523 10.834243/s

vus............................: 1 min=1 max=20

vus_max........................: 20 min=20 max=20

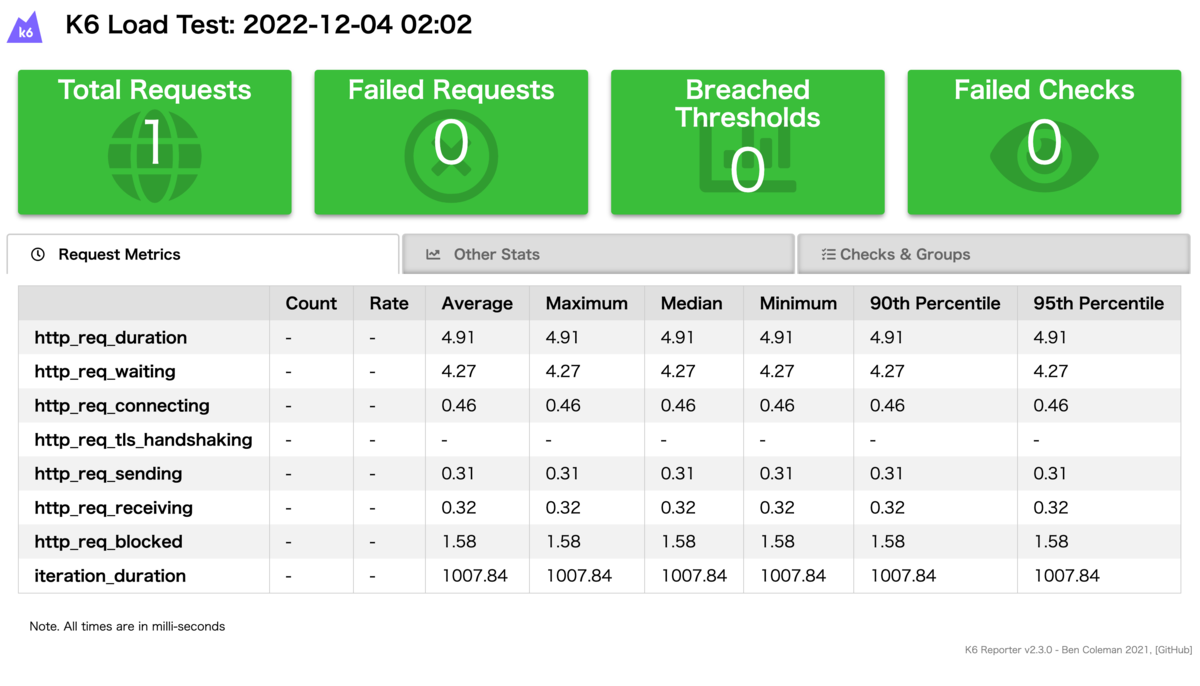

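Generating an HTML report

k6 doesn't ship an HTML report, but you can generate one by defining a handleSummary function. k6 calls it once at the end of the test with the summary data, and each key of the returned object names a file to write (or stdout), with the value as its content. Defining handleSummary replaces the default summary printed to the terminal unless you also return a stdout entry, which is why no metrics appear in the output below.

Let's try benc-uk/k6-reporter.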

// script_with_report.js
import http from 'k6/http';
import { sleep } from 'k6';
import { htmlReport } from "https://raw.githubusercontent.com/benc-uk/k6-reporter/main/dist/bundle.js";

export default function () {
  http.get('http://localhost:8000');
  sleep(1);
}

// Called once at the end of the test; each returned key is a file to write.
export function handleSummary(data) {
  return {
    "summary.html": htmlReport(data),
  };
}

❯ k6 run script_with_report.js


execution: local

script: script_with_report.js

output: -

scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

* default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

running (00m01.0s), 0/1 VUs, 1 complete and 0 interrupted iterations

default ✓ [=================================] 1 VUs 00m01.0s/10m0s 1/1 iters, 1 per VU

INFO[0001] [k6-reporter v2.3.0] Generating HTML summary report source=console

❯ ls summary.html

summary.html
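If you would rather not import a bundle from a remote URL, a hand-rolled report is also possible, since handleSummary just returns strings. The sketch below builds a small HTML table in plain JS. It assumes the summary data keeps each metric under `data.metrics[name].values` with keys such as `avg`, `med`, `p(90)` and `p(95)` for trend metrics. `renderReport` and the sample object are illustrative, not part of k6. Inside a k6 script you would call it as `return { 'summary.html': renderReport(data) };`.

```javascript
// Escapes the few characters that matter inside HTML text.
function escapeHtml(value) {
  const map = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;' };
  return String(value).replace(/[&<>"]/g, (c) => map[c]);
}

// Builds a small HTML table from a handleSummary-style `data` object.
// Only trend metrics (values containing 'p(95)') are included.
function renderReport(data) {
  const rows = Object.entries(data.metrics)
    .filter(([, metric]) => metric.values && 'p(95)' in metric.values)
    .map(([name, metric]) => {
      const v = metric.values;
      const cells = [v.avg, v.med, v['p(90)'], v['p(95)']]
        .map((x) => `<td>${x.toFixed(2)}</td>`)
        .join('');
      return `<tr><td>${escapeHtml(name)}</td>${cells}</tr>`;
    })
    .join('\n');
  return [
    '<!DOCTYPE html>',
    '<html><body><h1>k6 summary</h1>',
    '<table><tr><th>metric</th><th>avg</th><th>med</th><th>p(90)</th><th>p(95)</th></tr>',
    rows,
    '</table></body></html>',
  ].join('\n');
}

// Hand-written sample in the assumed shape, using values from the first run above.
const sample = {
  metrics: {
    http_req_duration: {
      type: 'trend',
      values: { avg: 7.37, min: 7.37, med: 7.37, max: 7.37, 'p(90)': 7.37, 'p(95)': 7.37 },
    },
    http_reqs: { type: 'counter', values: { count: 1, rate: 0.986544 } },
  },
};

console.log(renderReport(sample));
```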

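k6 itself doesn't draw results in real time. It can, however, stream metrics while the test runs (the `--out` option), so you can feed them into an external tool and visualize them there.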
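For example, `k6 run --out json=results.json script.js` writes metric samples to a file, one JSON object per line, which an external tool can read as it grows. Below is a minimal sketch of such a consumer in plain JS that averages one metric per second. The line layout it assumes (`type`, `metric`, and for `Point` lines `data.time` and `data.value`) is my understanding of k6's JSON output and should be checked against your k6 version. `perSecondAverage` is a hypothetical name, and the sample lines are made up.

```javascript
// Averages one metric per second from k6-style JSON output lines.
// Assumes "Point" lines carry an ISO 8601 `data.time` and a numeric `data.value`.
function perSecondAverage(ndjson, metricName) {
  const buckets = new Map(); // "YYYY-MM-DDTHH:MM:SS" -> { sum, count }
  for (const line of ndjson.split('\n')) {
    if (line.trim() === '') continue;
    const entry = JSON.parse(line);
    if (entry.type !== 'Point' || entry.metric !== metricName) continue;
    // Truncate to whole seconds; assumes all timestamps share one UTC offset.
    const second = entry.data.time.slice(0, 19);
    const bucket = buckets.get(second) ?? { sum: 0, count: 0 };
    bucket.sum += entry.data.value;
    bucket.count += 1;
    buckets.set(second, bucket);
  }
  return [...buckets.entries()]
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0))
    .map(([second, { sum, count }]) => ({ second, count, avg: sum / count }));
}

// Made-up sample lines in the assumed format.
const sampleOutput = [
  '{"type":"Metric","metric":"http_req_duration","data":{"type":"trend","contains":"time"}}',
  '{"type":"Point","metric":"http_req_duration","data":{"time":"2022-12-04T01:44:29.100Z","value":10,"tags":{}}}',
  '{"type":"Point","metric":"http_req_duration","data":{"time":"2022-12-04T01:44:29.600Z","value":20,"tags":{}}}',
  '{"type":"Point","metric":"http_reqs","data":{"time":"2022-12-04T01:44:29.600Z","value":1,"tags":{}}}',
  '{"type":"Point","metric":"http_req_duration","data":{"time":"2022-12-04T01:44:30.050Z","value":6,"tags":{}}}',
].join('\n');

console.log(perSecondAverage(sampleOutput, 'http_req_duration'));
```

For a live dashboard you would more likely send the output to a time-series database and graph it there, but the per-line format makes ad-hoc processing like this straightforward.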

Trying it with gRPC too

// ./definitions/hello.proto
// based on https://grpc.io/docs/guides/concepts.html
syntax = "proto2";

package hello;

service HelloService {
  rpc SayHello(HelloRequest) returns (HelloResponse);
  rpc LotsOfReplies(HelloRequest) returns (stream HelloResponse);
  rpc LotsOfGreetings(stream HelloRequest) returns (HelloResponse);
  rpc BidiHello(stream HelloRequest) returns (stream HelloResponse);
}

message HelloRequest {
  optional string greeting = 1;
}

message HelloResponse {
  required string reply = 1;
}

// grpc.js
import grpc from 'k6/net/grpc';
import { check, sleep } from 'k6';

// Load and parse the proto once, in the init context.
const client = new grpc.Client();
client.load(['definitions'], 'hello.proto');

export default () => {
  // plaintext defaults to false, so the connection uses TLS.
  client.connect('grpcbin.test.k6.io:9001', {
    // plaintext: false
  });

  const data = { greeting: 'Bert' };
  const response = client.invoke('hello.HelloService/SayHello', data);

  check(response, {
    'status is OK': (r) => r && r.status === grpc.StatusOK,
  });

  console.log(JSON.stringify(response.message));

  client.close();
  sleep(1);
};

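It seems to work without problems. I wondered whether k6 compiles the proto into JS code on every run; according to the k6 docs, `client.load()` loads and parses the .proto definitions at startup, so there doesn't appear to be a separate JS code-generation step.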

❯ k6 run grpc.js


execution: local

script: grpc.js

output: -

scenarios: (100.00%) 1 scenario, 1 max VUs, 10m30s max duration (incl. graceful stop):

* default: 1 iterations for each of 1 VUs (maxDuration: 10m0s, gracefulStop: 30s)

INFO[0000] {"reply":"hello Bert"} source=console

running (00m01.8s), 0/1 VUs, 1 complete and 0 interrupted iterations

default ✓ [=================================] 1 VUs 00m01.8s/10m0s 1/1 iters, 1 per VU

✓ status is OK

checks...............: 100.00% ✓ 1 ✗ 0

data_received........: 5.8 kB 3.3 kB/s

data_sent............: 712 B 403 B/s

grpc_req_duration....: avg=168.28ms min=168.28ms med=168.28ms max=168.28ms p(90)=168.28ms p(95)=168.28ms

iteration_duration...: avg=1.76s min=1.76s med=1.76s max=1.76s p(90)=1.76s p(95)=1.76s

iterations...........: 1 0.565688/s

vus..................: 1 min=1 max=1

vus_max..............: 1 min=1 max=1

Trying the k6 operator

❯ git clone https://github.com/grafana/k6-operator && cd k6-operator
Cloning into 'k6-operator'...
remote: Enumerating objects: 1171, done.
remote: Counting objects: 100% (610/610), done.
remote: Compressing objects: 100% (316/316), done.
remote: Total 1171 (delta 415), reused 383 (delta 279), pack-reused 561
Receiving objects: 100% (1171/1171), 693.39 KiB | 3.85 MiB/s, done.
Resolving deltas: 100% (593/593), done.
❯ make deploy
/Users/mypc/go/bin/controller-gen "crd:trivialVersions=true,crdVersions=v1" rbac:roleName=manager-role webhook paths="./..." output:crd:artifacts:config=config/crd/bases
cd config/manager && /Users/mypc/.asdf/shims/kustomize edit set image controller=ghcr.io/grafana/operator:latest
/Users/mypc/.asdf/shims/kustomize build config/default | kubectl apply -f -
namespace/k6-operator-system created
customresourcedefinition.apiextensions.k8s.io/k6s.k6.io created
serviceaccount/k6-operator-controller created
role.rbac.authorization.k8s.io/k6-operator-leader-election-role created
clusterrole.rbac.authorization.k8s.io/k6-operator-manager-role created
clusterrole.rbac.authorization.k8s.io/k6-operator-metrics-reader created
clusterrole.rbac.authorization.k8s.io/k6-operator-proxy-role created
rolebinding.rbac.authorization.k8s.io/k6-operator-leader-election-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/k6-operator-manager-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/k6-operator-proxy-rolebinding created
service/k6-operator-controller-manager-metrics-service created
deployment.apps/k6-operator-controller-manager created

Under the hood, it looks like this:

❯ cat k8s-test.js
import http from 'k6/http';
import { check } from 'k6';

export const options = {
  stages: [
    { target: 200, duration: '30s' },
    { target: 0, duration: '30s' },
  ],
};

export default function () {
  const result = http.get('https://test-api.k6.io/public/crocodiles/');
  check(result, {
    'http response status code is 200': result.status === 200,
  });
}

❯ kubectl create configmap crocodile-stress-test --from-file k8s-test.js

configmap/crocodile-stress-test created

❯ kubectl apply -f <(cat << EOF
apiVersion: k6.io/v1alpha1
kind: K6
metadata:
  name: k6-sample
spec:
  parallelism: 4
  script:
    configMap:
      name: crocodile-stress-test
      # Must match the key created by --from-file above
      file: k8s-test.js
EOF
)

k6.k6.io/k6-sample created

After leaving it for a while, the test had finished.

❯ kubectl get pod

NAME READY STATUS RESTARTS AGE

k6-sample-1-bpnj6 0/1 Completed 0 4m20s

k6-sample-2-l8rfx 0/1 Completed 0 4m19s

k6-sample-3-sn8jn 0/1 Completed 0 4m19s

k6-sample-4-qlnv7 0/1 Completed 0 4m19s

k6-sample-initializer-h4lts 0/1 Completed 0 4m30s

k6-sample-starter-rcd7w 0/1 Completed 0 4m10s

nginx-76d6c9b8c-qmnpf 1/1 Running 0 67m

❯ kubectl logs k6-sample-1-bpnj6

✓ http response status code is 200

checks.........................: 100.00% ✓ 1733 ✗ 0

data_received..................: 1.7 MB 29 kB/s

data_sent......................: 226 kB 3.8 kB/s

http_req_blocked...............: avg=11.06ms min=8.79µs med=33.45µs max=471.54ms p(90)=62.04µs p(95)=86.75µs

http_req_connecting............: avg=5.32ms min=0s med=0s max=197.37ms p(90)=0s p(95)=0s

http_req_duration..............: avg=888.68ms min=173.88ms med=246.35ms max=3.49s p(90)=2.47s p(95)=2.8s

{ expected_response:true }...: avg=888.68ms min=173.88ms med=246.35ms max=3.49s p(90)=2.47s p(95)=2.8s

http_req_failed................: 0.00% ✓ 0 ✗ 1733

http_req_receiving.............: avg=531.54µs min=113.75µs med=460.62µs max=5.84ms p(90)=841.12µs p(95)=929.45µs

http_req_sending...............: avg=144.84µs min=38.95µs med=131.83µs max=1.93ms p(90)=212.34µs p(95)=243.32µs

http_req_tls_handshaking.......: avg=5.67ms min=0s med=0s max=275.94ms p(90)=0s p(95)=0s

http_req_waiting...............: avg=888ms min=173.27ms med=246.01ms max=3.49s p(90)=2.47s p(95)=2.79s

http_reqs......................: 1733 28.809799/s

iteration_duration.............: avg=900.84ms min=174.88ms med=269.72ms max=3.72s p(90)=2.48s p(95)=2.8s

iterations.....................: 1733 28.809799/s

vus............................: 1 min=0 max=50

vus_max........................: 50 min=50 max=50
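This pod reports vus_max=50 even though the script peaks at 200 VUs. To my understanding, k6-operator implements `parallelism` by starting each runner pod with its own k6 execution segment (the `--execution-segment` and `--execution-segment-sequence` options), so each pod runs a fixed fraction of the configured VUs, and 200 / 4 = 50. The plain-JS sketch below only reproduces that arithmetic for an even split. The helper names are hypothetical and this is not the operator's code. Uneven splits in k6 follow more involved rounding rules than the `Math.round` used here.

```javascript
// Formats boundary i of n as a fraction string ("0", "1/4", ..., "1").
// Fractions are left unreduced for readability.
function frac(i, n) {
  if (i === 0) return '0';
  if (i === n) return '1';
  return `${i}/${n}`;
}

// Splits the whole test [0, 1] into n equal "from:to" segments.
function segments(n) {
  return Array.from({ length: n }, (_, i) => `${frac(i, n)}:${frac(i + 1, n)}`);
}

// Comma-separated list of all segment boundaries.
function segmentSequence(n) {
  return Array.from({ length: n + 1 }, (_, i) => frac(i, n)).join(',');
}

// VUs each runner gets at a given overall target, for an even split.
function vusPerRunner(targetVUs, n) {
  return Math.round(targetVUs / n);
}

const parallelism = 4; // spec.parallelism in the K6 resource
const peakVUs = 200;   // highest stage target in k8s-test.js
console.log(segments(parallelism));
console.log(segmentSequence(parallelism));
console.log(vusPerRunner(peakVUs, parallelism)); // 50
```

The same split explains why each pod's http_reqs count is only part of the total load: the four pods together generate the full scenario.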

To run the test again, you need to delete the k6-sample resource (e.g. `kubectl delete k6 k6-sample`) and then create it again.

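Bonus: how does k6 run JavaScript from Go?

k6 executes JavaScript with dop251/goja, a JavaScript runtime written in Go. Seen that way, k6 seems to be fairly simply built (HTML output is left to external tools, and it doesn't visualize results in real time either).

Its own job seems to be mainly running the tests, managing the results, and managing goroutines as the scenario scales VUs up and down.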